Today NVIDIA released its quarterly results for the second quarter of their fiscal year 2016 (yes, 2016). They had excellent sales of their GeForce GPUs, but have decided to write down their Icera modem business, which hit their operating expenses to the tune of around $90 million. Revenue for the quarter was still up 5% compared to Q2 2015, coming in at $1.153 billion. On a GAAP basis, gross margin was 55%, down 110 bps from last year and down 170 bps from last quarter. Net income was just $26 million, down 81% sequentially and 80% year-over-year. This resulted in diluted earnings per share of $0.05, down 77% from Q2 2015’s $0.22.
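
Margin changes here are quoted in basis points (bps), where 100 bps equals one percentage point. As a quick sketch, assuming the 55% GAAP figure is exact rather than rounded, the prior-period margins can be backed out like this (the `prior_margin` helper is purely illustrative):

```python
def prior_margin(current_pct: float, decline_bps: float) -> float:
    """Return the earlier margin, given the current margin and a decline in basis points."""
    # 1 basis point = 0.01 percentage point, so 110 bps = 1.10 points
    return current_pct + decline_bps / 100


gaap_margin = 55.0                               # Q2 FY2016 GAAP gross margin, %
year_ago = prior_margin(gaap_margin, 110)        # decline vs. Q2 FY2015
last_quarter = prior_margin(gaap_margin, 170)    # decline vs. Q1 FY2016

print(f"Q2 FY2015: {year_ago:.1f}%, Q1 FY2016: {last_quarter:.1f}%")
```

Under that assumption, GAAP gross margin was about 56.1% a year ago and about 56.7% last quarter.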

A big factor in this was the write-down of their Icera modem division. NVIDIA had been looking for a buyer for the modem unit but was unable to find a suitable one, and is therefore winding down operations there. This caused a hit of $0.19 per diluted share. Also during the quarter, NVIDIA announced a recall of their SHIELD tablets due to overheating batteries, which have caused two cases of property damage. This caused another hit of $0.02 per diluted share. They also had $24 million in expenses related to their lawsuit against Samsung and Qualcomm.

NVIDIA’s non-GAAP results “exclude stock-based compensation, product warranty charge, acquisition-related costs, restructuring and other charges, gains and losses from non-affiliated investments, interest expense related to amortization of debt discount, and the associated tax impact of these items, where applicable,” which means that they reflect neither the Icera write-down nor the tablet recall. On a non-GAAP basis, gross margin was up 20 bps to 56.6%, with net income up 10% to $190 million. Diluted earnings per share were $0.34, up 13% from Q2 2015’s non-GAAP $0.30. Despite a significant write-down and a recall, the core business is still doing very well.
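
To put the GAAP and non-GAAP results side by side, here is a minimal Python sketch of the year-over-year EPS changes, using only the per-share figures quoted above; the `pct_change` helper is hypothetical:

```python
def pct_change(new: float, old: float) -> float:
    """Percent change from old to new."""
    return (new - old) / old * 100


gaap = pct_change(0.05, 0.22)      # diluted EPS, GAAP: Q2 FY2016 vs. Q2 FY2015
non_gaap = pct_change(0.34, 0.30)  # diluted EPS, non-GAAP: same periods

print(f"GAAP EPS: {gaap:.0f}%, non-GAAP EPS: {non_gaap:+.0f}%")
```

Both results line up with the rounded figures in the release: GAAP EPS down about 77%, non-GAAP EPS up about 13%. The gap between the two is almost entirely the Icera and SHIELD charges.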

For the quarter, NVIDIA paid out $52 million in dividends and repurchased $400 million in stock.

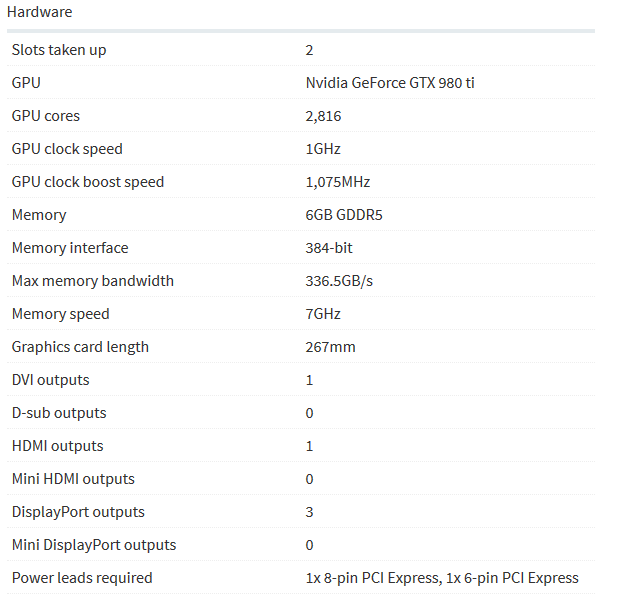

What is driving growth right now is their GPU business. Revenue for GeForce GPUs grew 51%, and NVIDIA continues to see strength in the PC gaming sector. Fueled by the release of the GTX 980 and GTX 980 Ti, sales of high-end GTX GPUs “grew significantly” year-over-year. The Titan X would certainly fall in there as well, though likely at much lower volume. Maxwell has been a very strong performer, and gamers tend to go where the performance is. Souring the results somewhat is a decline in both Tesla and Quadro GPU sales. Overall, GPU revenue was up 9% year-over-year to $959 million. Even as NVIDIA has tried to diversify with SoCs, their GPU business still accounts for about 83% of the company’s revenue.

NVIDIA has found a niche in the automotive infotainment world, and that area is still strong for them. Tegra has not taken off in the tablet or smartphone space in any meaningful way, but automotive sales of Tegra continued to grow. Overall, Tegra processor revenue was down 19% year-over-year, mainly due to lower sales into OEM smartphones and tablets. Sales of NVIDIA’s own Tegra-powered SHIELD devices helped offset this loss somewhat, but as the recall filings showed, they only sold 88,000 SHIELD tablets. Margins on those devices are likely helped, though, by the fact that they run NVIDIA’s own SoC.

NVIDIA’s “Other” segment is a fixed $66 million licensing payment from Intel, and as always, it is flat. This comes from the 2011 settlement of a licensing dispute, and the payments will end in 2017.
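
The figures above give dollar amounts for GPU and Other but not for Tegra. Assuming GPU, Tegra, and Other are the only three segments, as described here, Tegra revenue can be estimated as the remainder of the $1.153 billion total. This is a back-of-the-envelope sketch, not a reported number:

```python
total = 1153  # Q2 FY2016 revenue, $ millions

segments = {"GPU": 959, "Other (Intel license)": 66}
# Assumption: only three segments exist, so Tegra is whatever is left over
segments["Tegra (implied)"] = total - sum(segments.values())

for name, revenue in segments.items():
    # Print each segment's revenue and its share of total revenue
    print(f"{name:22s} ${revenue:>5,}M  {revenue / total:6.1%}")
```

Under that assumption, Tegra comes out to roughly $128 million (about 11.1% of revenue), with GPUs at about 83.2% and the Intel payment at about 5.7%.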

For Q3 2016, NVIDIA is expecting revenue of $1.18 billion, plus or minus 2%, with gross margins of 56.2% to 56.5%.
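
A "plus or minus 2%" outlook is conventionally applied to the midpoint. Assuming that reading, the implied Q3 revenue window can be sketched as follows (`guidance_range` is an illustrative helper, not anything NVIDIA publishes):

```python
def guidance_range(midpoint: float, tolerance: float) -> tuple[float, float]:
    """Low and high ends of a 'midpoint plus or minus tolerance' outlook."""
    return midpoint * (1 - tolerance), midpoint * (1 + tolerance)


low, high = guidance_range(1.18, 0.02)  # $ billions, plus or minus 2%
print(f"Q3 FY2016 outlook: ${low:.3f}B to ${high:.3f}B")
```

That works out to roughly $1.156 billion to $1.204 billion, with the midpoint about 2% above this quarter's $1.153 billion.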

NVIDIA is obviously a giant in the GPU space, and that business is going very well for them. Sales are very strong, and PC gaming has been a bright spot in an otherwise weakening PC market. They are attempting to diversify into mobile, but have found out just how difficult that can be, and had to write down their modem division completely. Without a good integrated modem, it will be difficult to gain traction in the smartphone space, though NVIDIA’s current SoC offerings don’t seem well suited to smartphones anyway. Their GPU expertise has certainly helped them on the GPU side of the equation, but their first attempt at CPU design has not been as strong. We shall see what their plans are for the SoC space going forward, but for now they are riding a wave of strong GPU sales, and that is a good thing for NVIDIA.